LowLevelParticleFilters

This is a library for state estimation: given measurements $y(t)$ from a dynamical system, estimate the state vector $x(t)$. Throughout, we assume dynamics of the form

\[\begin{aligned} x(t+1) &= f(x(t), u(t), p, t, w(t))\\ y(t) &= g(x(t), u(t), p, t, e(t)) \end{aligned}\]

or the linear version

\[\begin{aligned} x(t+1) &= Ax(t) + Bu(t) + w(t)\\ y(t) &= Cx(t) + Du(t) + e(t) \end{aligned}\]

where $x$ is the state vector, $u$ an input, $p$ some form of parameters, $t$ is the time and $w,e$ are disturbances (noise). Throughout the documentation, we often call the function $f$ dynamics and the function $g$ measurement.

The dynamics above describe a discrete-time system, i.e., the function $f$ takes the current state and produces the next state. This is in contrast to a continuous-time system, where $f$ takes the current state but produces the time derivative of the state. A continuous-time system can be discretized, as described in detail in Discretization.
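As a rough illustration of what discretization means here, the sketch below wraps a continuous-time function in a fixed-step fourth-order Runge-Kutta integrator, so that the result returns the next state rather than the time derivative. The names `rk4_discretize` and `xdot` are hypothetical and not part of this package; the input is assumed to be held constant over each sample interval.

```julia
# Hypothetical helper, not part of LowLevelParticleFilters:
# turn continuous-time dynamics ẋ = fc(x, u, p, t) into a discrete-time
# map x(t + Ts) = fd(x, u, p, t), holding u constant over the interval.
function rk4_discretize(fc, Ts)
    function fd(x, u, p, t)
        k1 = fc(x, u, p, t)
        k2 = fc(x .+ (Ts / 2) .* k1, u, p, t + Ts / 2)
        k3 = fc(x .+ (Ts / 2) .* k2, u, p, t + Ts / 2)
        k4 = fc(x .+ Ts .* k3, u, p, t + Ts)
        return x .+ (Ts / 6) .* (k1 .+ 2 .* k2 .+ 2 .* k3 .+ k4)
    end
    return fd
end

# Example: first-order lag ẋ = -x + u, sampled with Ts = 0.1
xdot(x, u, p, t) = -x .+ u
fd = rk4_discretize(xdot, 0.1)
x1 = fd([1.0], [0.0], nothing, 0.0)   # one discrete step from x = 1 with zero input
```

With zero input the exact solution after one step is `exp(-0.1)`, which gives a simple way to check that the step size is small enough for the dynamics at hand.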

The parameters $p$ can be anything, or left out. You may write the dynamics functions such that they depend on $p$ and include parameters when you create a filter object. You may also override the parameters stored in the filter object when you call any function on the filter object. This behavior is modeled after the SciML ecosystem.

Depending on the nature of $f$ and $g$, the best method of estimating the state may vary. If $f,g$ are linear and the disturbances are additive and Gaussian, the KalmanFilter is an optimal state estimator. If any of the above assumptions fail to hold, we may need to resort to more advanced estimators. This package provides several filter types, outlined below.

Estimator types

We provide a number of filter types

- KalmanFilter: A standard Kalman filter. Is restricted to linear dynamics (possibly time varying) and Gaussian noise.

- SqKalmanFilter: A standard Kalman filter on square-root form (slightly slower but more numerically stable with ill-conditioned covariance).

- ExtendedKalmanFilter: For nonlinear systems, the EKF runs a regular Kalman filter on linearized dynamics. Uses ForwardDiff.jl for linearization (or user provided). The noise model must still be Gaussian and additive.

- IteratedExtendedKalmanFilter: Same as EKF, but performs iteration in the measurement update for increased accuracy in the covariance update.

- UnscentedKalmanFilter: The Unscented Kalman filter often performs slightly better than the Extended Kalman filter but may be slightly more computationally expensive. The UKF handles nonlinear dynamics and measurement models, but still requires a Gaussian noise model (may be non additive) and still assumes that all posterior distributions are Gaussian, i.e., can not handle multi-modal posteriors.

- ParticleFilter: The particle filter is a nonlinear estimator. This version of the particle filter is simple to use and assumes that both dynamics noise and measurement noise are additive. Particle filters handle multi-modal posteriors.

- AdvancedParticleFilter: This filter gives you more flexibility, at the expense of having to define a few more functions. This filter does not require the noise to be additive and is thus the most flexible filter type.

- AuxiliaryParticleFilter: This filter is identical to ParticleFilter, but uses a slightly different proposal mechanism for new particles.

- IMM: (Currently considered experimental) The Interacting Multiple Models filter switches between multiple internal filters based on a hidden Markov model. This filter is useful when the system dynamics change over time and the change can be modeled as a discrete Markov chain, i.e., the system may switch between a small number of discrete "modes".

- RBPF: A Rao-Blackwellized particle filter that uses a Kalman filter for the linear part of the state and a particle filter for the nonlinear part.

Functionality

This package provides

- Filtering, estimating $x(t)$ given measurements up to and including time $t$. We call the filtered estimate $x(t|t)$ (read as $x$ at $t$ given $t$).

- Smoothing, estimating $x(t)$ given data up to $T > t$, i.e., $x(t|T)$.

- Parameter estimation.

All filters work in two distinct steps.

- The prediction step (predict!). During prediction, we use the dynamics model to form $x(t|t-1) = f(x(t-1), ...)$.

- The correction step (correct!). In this step, we adjust the predicted state $x(t|t-1)$ using the measurement $y(t)$ to form $x(t|t)$.

The following two exceptions to the above exist

- The IMM filter has two additional steps, combine! and interact!.

- The AuxiliaryParticleFilter makes use of the next measurement in the dynamics update, and thus only has an update! method.

In general, all filters represent not only a point estimate of $x(t)$, but a representation of the complete posterior probability distribution over $x$ given all the data available up to time $t$. One major difference between different filter types is how they represent these probability distributions.

Particle filter

A particle filter represents the probability distribution over the state as a collection of samples, where each sample is propagated through the dynamics function $f$ individually. When a measurement becomes available, the samples, called particles, are given a weight based on how likely the particle is given the measurement. Each particle can thus be seen as representing a hypothesis about the current state of the system. After a few time steps, most weights are inevitably going to be extremely small, a manifestation of the curse of dimensionality, and a resampling step is incorporated to refresh the particle distribution and focus the particles on areas of the state space with high posterior probability.
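The propagate-and-weight idea can be sketched in a few lines of plain Julia. This is a minimal illustration for a scalar state with additive Gaussian noise, not the implementation used by this package; the function `pf_step!` and all of its arguments are hypothetical names chosen for the example.

```julia
using Random

# One propagate-and-weight step of a bootstrap particle filter for a scalar
# state with additive Gaussian noise. All names here are illustrative.
function pf_step!(x, logw, y, f, g, σw, σe, rng)
    for i in eachindex(x)
        x[i] = f(x[i]) + σw * randn(rng)   # propagate the particle and add dynamics noise
        e = y - g(x[i])                    # measurement residual for this hypothesis
        logw[i] += -0.5 * (e / σe)^2       # Gaussian log-likelihood, up to a constant
    end
    logw .-= maximum(logw)                 # guard against numerical underflow
    logw .-= log(sum(exp, logw))           # normalize so that the weights sum to one
    return x, logw
end

rng = MersenneTwister(1)
N = 1000
x = randn(rng, N)                          # samples from the initial distribution
logw = fill(-log(N), N)                    # uniform initial weights
pf_step!(x, logw, 0.5, x -> 0.9x, x -> x, 0.1, 0.2, rng)
xhat = sum(exp.(logw) .* x)                # weighted mean as a point estimate
```

Working with log-weights, as done here, avoids underflow when many particles are far from the measurement.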

Defining a particle filter (ParticleFilter) is straightforward: one must define the distribution of the noise df in the dynamics function dynamics(x,u,p,t) and the noise distribution dg in the measurement function measurement(x,u,p,t). Both of these noise sources are assumed to be additive, but can have any distribution (see AdvancedParticleFilter for non-additive noise). The distribution of the initial state estimate d0 must also be provided. In the example below, we use linear Gaussian dynamics so that we can easily compare both particle and Kalman filters. (If we have something close to linear Gaussian dynamics in practice, we should of course use a Kalman filter and not a particle filter.)

using LowLevelParticleFilters, LinearAlgebra, StaticArrays, Distributions, Plots

Define problem

nx = 2 # Dimension of state

nu = 1 # Dimension of input

ny = 1 # Dimension of measurements

N = 500 # Number of particles

const dg = MvNormal(ny,0.2) # Measurement noise Distribution

const df = MvNormal(nx,0.1) # Dynamics noise Distribution

const d0 = MvNormal(randn(nx),2.0) # Initial state Distribution

Define linear state-space system (using StaticArrays for maximum performance)

const A = SA[0.97043 -0.097368

0.09736 0.970437]

const B = SA[0.1; 0;;]

const C = SA[0 1.0]

Next, we define the dynamics and measurement equations. Both take the signature (x,u,p,t) = (state, input, parameters, time)

dynamics(x,u,p,t) = A*x .+ B*u

measurement(x,u,p,t) = C*x

vecvec_to_mat(x) = copy(reduce(hcat, x)') # Helper function

The parameter p can be anything, and is often optional. If p is not provided when performing operations on filters, any p stored in the filter objects (if supported) is used. The default if none is provided and none is stored in the filter is p = LowLevelParticleFilters.NullParameters().

We are now ready to define and use a filter

pf = ParticleFilter(N, dynamics, measurement, df, dg, d0)

ParticleFilter{PFstate{StaticArraysCore.SVector{2, Float64}, Float64}, typeof(Main.dynamics), typeof(Main.measurement), Distributions.ZeroMeanIsoNormal{Tuple{Base.OneTo{Int64}}}, Distributions.ZeroMeanIsoNormal{Tuple{Base.OneTo{Int64}}}, Distributions.IsoNormal, DataType, Random.Xoshiro, LowLevelParticleFilters.NullParameters}

state: PFstate{StaticArraysCore.SVector{2, Float64}, Float64}

dynamics: dynamics (function of type typeof(Main.dynamics))

measurement: measurement (function of type typeof(Main.measurement))

dynamics_density: Distributions.ZeroMeanIsoNormal{Tuple{Base.OneTo{Int64}}}

measurement_density: Distributions.ZeroMeanIsoNormal{Tuple{Base.OneTo{Int64}}}

initial_density: Distributions.IsoNormal

resample_threshold: Float64 0.1

resampling_strategy: LowLevelParticleFilters.ResampleSystematic <: LowLevelParticleFilters.ResamplingStrategy

rng: Random.Xoshiro

p: LowLevelParticleFilters.NullParameters LowLevelParticleFilters.NullParameters()

threads: Bool false

Ts: Float64 1.0

nu: Int64 -1

ny: Int64 1

With the filter in hand, we can simulate from its dynamics and query some properties

du = MvNormal(nu,1.0) # Random input distribution for simulation

xs,u,y = simulate(pf,200,du) # We can simulate the model that the pf represents

pf(u[1], y[1]) # Perform one filtering step using input u and measurement y

particles(pf) # Query the filter for particles, try weights(pf) or expweights(pf) as well

x̂ = weighted_mean(pf) # using the current state

2-element Vector{Float64}:

-0.32294485318841326

0.8792889957040947

If you want to perform batch filtering using an existing trajectory consisting of vectors of inputs and measurements, try any of the functions forward_trajectory, mean_trajectory:

sol = forward_trajectory(pf, u, y) # Filter whole trajectories at once

x̂,ll = mean_trajectory(pf, u, y)

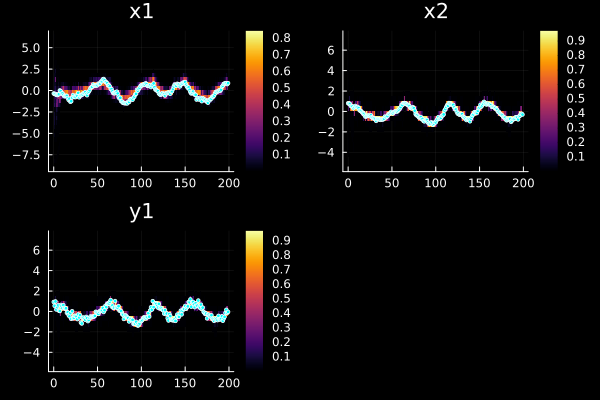

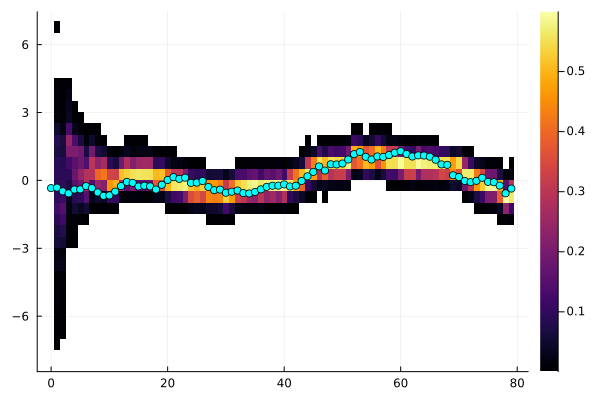

plot(sol, xreal=xs, markersize=2)

u and y are then assumed to be vectors of vectors. StaticArrays is recommended for maximum performance.

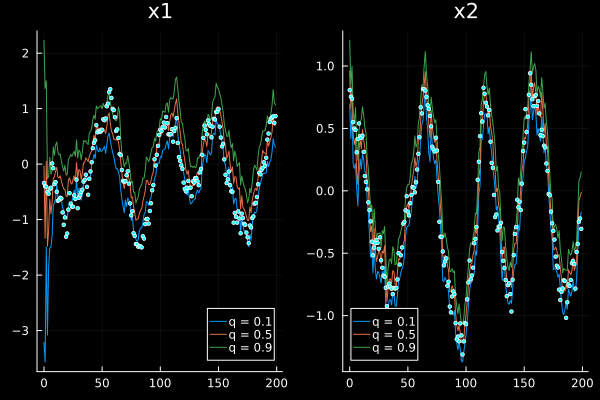

We can also plot weighted quantiles instead of 2D histograms by providing a vector of desired quantiles through the q keyword argument

plot(sol, xreal=xs, markersize=2, q=[0.1, 0.5, 0.9], ploty=false, legend=true)

If MonteCarloMeasurements.jl is loaded, you may transform the output particles to Matrix{MonteCarloMeasurements.Particles} with the layout T × n_state using Particles(x,we). Internally, the particles are then resampled such that they all have unit weight. This is convenient for making use of the plotting facilities of MonteCarloMeasurements.jl.

For a full usage example, see the benchmark section below or example_lineargaussian.jl

Resampling

The particle filter will perform a resampling step whenever the distribution of the weights has become degenerate. Resampling is triggered when the effective number of samples, measured relative to the total number of particles, falls below pf.resample_threshold $\in [0, 1]$. This value can be set when constructing the filter. How the resampling is done is governed by pf.resampling_strategy; we currently provide ResampleSystematic <: ResamplingStrategy as the only implemented strategy. See https://en.wikipedia.org/wiki/Particle_filter for more info.
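To make the degeneracy test and the resampling step concrete, here is a minimal sketch in plain Julia. The functions `effective_samples` and `systematic_resample` are hypothetical names written for this example and are not the package's internals. The trigger condition assumes that the threshold is compared against the effective number of samples divided by the number of particles, which is what the range $[0, 1]$ suggests.

```julia
using Random

# Effective number of samples for normalized weights w (summing to one).
effective_samples(w) = 1 / sum(abs2, w)

# Systematic resampling: a single uniform draw, then N evenly spaced points
# swept across the cumulative weights. Returns indices of surviving particles.
function systematic_resample(w, rng = Random.default_rng())
    N = length(w)
    idx = Vector{Int}(undef, N)
    u = rand(rng) / N          # single random offset in [0, 1/N)
    c = w[1]                   # running cumulative weight
    j = 1
    for i in 1:N
        while c < u && j < N   # advance until the cumulative weight covers u
            j += 1
            c += w[j]
        end
        idx[i] = j
        u += 1 / N
    end
    return idx
end

w = [0.7, 0.1, 0.1, 0.1]       # one dominant particle
threshold = 0.5                # plays the role of resample_threshold (assumed relative to N)
if effective_samples(w) < threshold * length(w)
    idx = systematic_resample(w, MersenneTwister(0))
end
```

After resampling, the particles selected by `idx` would all be assigned equal weight, and heavily weighted particles appear several times in `idx`.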

Particle Smoothing

Smoothing is the process of finding the best state estimate given both past and future data. Smoothing is thus only possible in an offline setting. This package provides a particle smoother based on forward filtering, backward simulation (FFBS). Example usage follows:

N = 2000 # Number of particles

T = 80 # Number of time steps

M = 100 # Number of smoothed backwards trajectories

pf = ParticleFilter(N, dynamics, measurement, df, dg, d0)

du = MvNormal(nu,1) # Control input distribution

x,u,y = simulate(pf,T,du) # Simulate trajectory using the model in the filter

tosvec(y) = reinterpret(SVector{length(y[1]),Float64}, reduce(hcat,y))[:] |> copy

x,u,y = tosvec.((x,u,y)) # It's good for performance to use StaticArrays to the extent possible

xb,ll = smooth(pf, M, u, y) # Sample smoothing particles

xbm = smoothed_mean(xb) # Calculate the mean of smoothing trajectories

xbc = smoothed_cov(xb) # And covariance

xbt = smoothed_trajs(xb) # Get smoothing trajectories

xbs = [diag(xbc) for xbc in xbc] |> vecvec_to_mat .|> sqrt

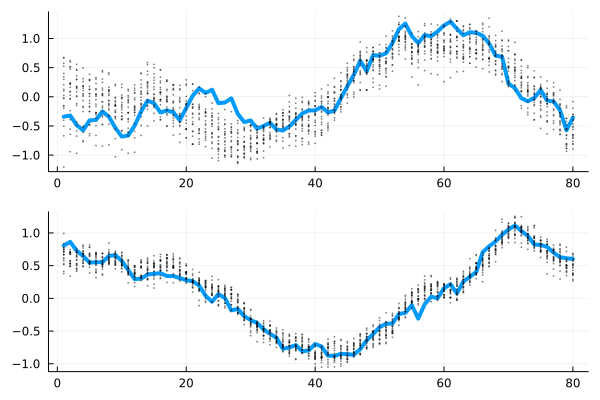

plot(xbm', ribbon=2xbs, lab="PF smooth")

plot!(vecvec_to_mat(x), l=:dash, lab="True")

We can plot the particles themselves as well

downsample = 5

plot(vecvec_to_mat(x), l=(4,), layout=(2,1), show=false)

scatter!(xbt[1, 1:downsample:end, :]', subplot=1, show=false, m=(1,:black, 0.5), lab="")

scatter!(xbt[2, 1:downsample:end, :]', subplot=2, m=(1,:black, 0.5), lab="")

Kalman filter

The KalmanFilter (wiki) assumes that $f$ and $g$ are linear functions, i.e., that they can be written in the form

\[\begin{aligned} x(t+1) &= Ax(t) + Bu(t) + w(t)\\ y(t) &= Cx(t) + Du(t) + e(t) \end{aligned}\]

for some matrices $A,B,C,D$ where $w \sim N(0, R_1)$ and $e \sim N(0, R_2)$ are zero mean and Gaussian. The Kalman filter represents the posterior distributions over $x$ by the mean and a covariance matrix. The magic behind the Kalman filter is that linear transformations of Gaussian distributions remain Gaussian, and we thus have a very efficient way of representing them.
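The mean and covariance updates can be written out as a short sketch in plain Julia. The functions `kf_predict` and `kf_correct` are illustrative names using the textbook formulas; they are not the package's implementation. The numerical values mirror the example system on this page, with $R_1 = 0.1^2 I$ and $R_2 = 0.2^2$.

```julia
using LinearAlgebra

# Prediction: propagate mean and covariance through the linear dynamics.
function kf_predict(x, R, u, A, B, R1)
    return A * x + B * u, A * R * A' + R1
end

# Correction: adjust the prediction using the measurement y.
function kf_correct(x, R, u, y, C, D, R2)
    e = y - (C * x + D * u)       # prediction error (innovation)
    S = C * R * C' + R2           # innovation covariance
    K = (R * C') / S              # Kalman gain
    return x + K * e, (I - K * C) * R
end

A = [0.97043 -0.097368; 0.09736 0.970437]
B = reshape([0.1, 0.0], 2, 1)
C = [0.0 1.0]
D = zeros(1, 1)
R1 = Matrix(0.01I, 2, 2)          # dynamics noise covariance
R2 = fill(0.04, 1, 1)             # measurement noise covariance

x, R = zeros(2), Matrix(1.0I, 2, 2)
xp, Rp = kf_predict(x, R, [1.0], A, B, R1)            # x(t|t-1)
xc, Rc = kf_correct(xp, Rp, [1.0], [0.3], C, D, R2)   # x(t|t)
```

The correction step can only reduce the uncertainty, so the trace of `Rc` is smaller than that of `Rp`.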

A Kalman filter is easily created using the constructor KalmanFilter. Many of the functions defined for particle filters are also defined for Kalman filters, e.g.:

R1 = cov(df)

R2 = cov(dg)

kf = KalmanFilter(A, B, C, 0, R1, R2, d0)

sol = forward_trajectory(kf, u, y) # sol contains filtered state, predictions, pred cov, filter cov, loglik

It can also be called in a loop like the pf above

for t = 1:T

kf(u,y) # Performs both correct! and predict!

# alternatively

ll, e = correct!(kf, y, nothing, t) # Returns loglikelihood and prediction error (plus other things if you want)

x = state(kf) # Access the state estimate

R = covariance(kf) # Access the covariance of the estimate

predict!(kf, u, nothing, t)

end

The matrices in the Kalman filter may be time varying, such that A[:, :, t] is $A(t)$. They may also be provided as functions of the form $A(t) = A(x, u, p, t)$. This works for both dynamics and covariance matrices. When providing functions, the dimensions of the state, input and output, nx, nu, ny, must be provided as keyword arguments to the filter constructor since these cannot be inferred from the function signature.

The numeric type used in the Kalman filter is determined from the mean of the initial state distribution, so make sure that this has the correct type if you intend to use, e.g., Float32 or ForwardDiff.Dual for automatic differentiation.

Smoothing using KF

Kalman filters can also be used for smoothing

kf = KalmanFilter(A, B, C, 0, cov(df), cov(dg), d0)

smoothsol = smooth(kf, u, y) # Returns a smoothing solution including smoothed state and smoothed cov

Plot and compare PF and KF

# plot(smoothsol) The smoothing solution object can also be plotted directly

plot(vecvec_to_mat(smoothsol.xT), lab="Kalman smooth", layout=2)

plot!(xbm', lab="pf smooth")

plot!(vecvec_to_mat(x), lab="true")

Kalman filter tuning tutorial

The tutorial "How to tune a Kalman filter" details how to figure out appropriate covariance matrices for the Kalman filter, as well as how to add disturbance models to the system model.

Unscented Kalman Filter

The UnscentedKalmanFilter represents posterior distributions over $x$ as Gaussian distributions just like the KalmanFilter, but propagates them through a nonlinear function $f$ by a deterministic sampling of a small number of particles called sigma points (this is referred to as the unscented transform). This UKF thus handles nonlinear functions $f,g$, but only Gaussian disturbances and unimodal posteriors. The UKF will by default treat the noise as additive, but by using the augmented UKF form, non-additive noise may be handled as well. See the docstring of UnscentedKalmanFilter for more details.
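A minimal sketch of the unscented transform is given below, using the basic symmetric set of $2n+1$ sigma points with a single spread parameter `κ`. The function `unscented_transform` is a hypothetical name for this illustration; the package's own sigma-point weighting may differ, see the docstring of UnscentedKalmanFilter.

```julia
using LinearAlgebra

# Propagate a Gaussian N(μ, Σ) through f using 2n+1 sigma points.
function unscented_transform(f, μ, Σ; κ = 1.0)
    n = length(μ)
    L = cholesky(Symmetric((n + κ) * Σ)).L     # scaled matrix square root
    pts = [μ]                                  # center point
    for i in 1:n
        push!(pts, μ + L[:, i])                # points spread symmetrically
        push!(pts, μ - L[:, i])                # along the columns of L
    end
    w = [κ / (n + κ); fill(1 / (2 * (n + κ)), 2n)]   # weights summing to one
    ys = f.(pts)                               # push each sigma point through f
    μy = sum(w .* ys)                          # weighted mean of transformed points
    Σy = sum(w[i] * (ys[i] - μy) * (ys[i] - μy)' for i in eachindex(ys))
    return μy, Σy
end

A = [0.97043 -0.097368; 0.09736 0.970437]
μ = [1.0, -1.0]
Σ = [1.0 0.2; 0.2 0.5]
μy, Σy = unscented_transform(x -> A * x, μ, Σ)
```

For a linear function the transform is exact, so in this example `μy` equals `A*μ` and `Σy` equals `A*Σ*A'`; for nonlinear functions the result is an approximation.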

The UKF takes the same arguments as a regular KalmanFilter, but the matrices defining the dynamics are replaced by two functions, dynamics and measurement, working in the same way as for the ParticleFilter above (unless the augmented form is used).

ukf = UnscentedKalmanFilter(dynamics, measurement, cov(df), cov(dg), MvNormal(SA[1.,1.]); nu, ny)

UnscentedKalmanFilter{false,false,false,false}

Inplace dynamics: false

Inplace measurement: false

Augmented dynamics: false

Augmented measurement: false

nx: 2

nu: 1

ny: 1

Ts: 1.0

t: 0

dynamics: dynamics

measurement_model: UKFMeasurementModel{false, false, typeof(Main.measurement), PDMats.ScalMat{Float64}, typeof(-), typeof(weighted_mean), typeof(weighted_cov), typeof(LowLevelParticleFilters.cross_cov), LowLevelParticleFilters.SigmaPointCache{Vector{StaticArraysCore.SVector{2, Float64}}, Vector{StaticArraysCore.SVector{1, Float64}}}, TrivialParams})

R1: [0.01 0.0; 0.0 0.01]

d0: Distributions.MvNormal{Float64, PDMats.PDiagMat{Float64, StaticArraysCore.SVector{2, Float64}}, FillArrays.Zeros{Float64, 1, Tuple{Base.OneTo{Int64}}}}(

dim: 2

μ: Zeros(2)

Σ: [1.0 0.0; 0.0 1.0]

)

predict_sigma_point_cache: LowLevelParticleFilters.SigmaPointCache{Vector{StaticArraysCore.SVector{2, Float64}}, Vector{StaticArraysCore.SVector{2, Float64}}})

x: [0.0, 0.0]

R: [1.0 0.0; 0.0 1.0]

p: NullParameters()

reject: nothing

state_mean: weighted_mean

state_cov: weighted_cov

cholesky!: cholesky!

names: SignalNames(["x1", "x2"], ["u1"], ["y1"], "UKF")

weight_params: TrivialParams()

R1x: nothing

If your function dynamics describes a continuous-time ODE, do not forget to discretize it before passing it to the UKF. See Discretization for more information.

The UnscentedKalmanFilter has many customization options, see the docstring for more details. In particular, the UKF may be created with a linear measurement model as an optimization.

Extended Kalman Filter

The ExtendedKalmanFilter (EKF) is similar to the UKF, but propagates Gaussian distributions by linearizing the dynamics and using the formulas for linear systems similar to the standard Kalman filter. This can be slightly faster than the UKF (not always), but also less accurate for strongly nonlinear systems. The linearization is performed automatically using ForwardDiff.jl unless the user provides Jacobian functions that compute $A$ and $C$. In general, the UKF is recommended over the EKF unless the EKF is faster and computational performance is the top priority.
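The linearize-then-propagate idea behind the EKF prediction step can be sketched as follows. To keep the example free of dependencies, the Jacobian is approximated by forward differences instead of ForwardDiff.jl; `fd_jacobian`, `ekf_predict` and the toy `pendulum` dynamics are hypothetical names introduced only for this illustration.

```julia
using LinearAlgebra

# Forward-difference approximation of the Jacobian of f at x.
# (The package uses ForwardDiff.jl by default; finite differences are
# swapped in here only to keep the sketch self-contained.)
function fd_jacobian(f, x; h = 1e-6)
    fx = f(x)
    J = zeros(length(fx), length(x))
    for j in eachindex(x)
        xp = copy(x)
        xp[j] += h
        J[:, j] = (f(xp) - fx) / h
    end
    return J
end

# EKF prediction: the mean goes through f, the covariance through the Jacobian.
function ekf_predict(f, x, R, R1)
    A = fd_jacobian(f, x)
    return f(x), A * R * A' + R1
end

# Toy nonlinear discrete-time dynamics (already discretized)
pendulum(x) = [x[1] + 0.1 * x[2], x[2] - 0.1 * sin(x[1])]
x, R = ekf_predict(pendulum, [0.5, 0.0], Matrix(1.0I, 2, 2), 0.01I)
```

Supplying an analytical Jacobian, as the Ajac and Cjac options allow, avoids both the approximation error and the extra function evaluations of this finite-difference variant.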

The EKF constructor has the following two signatures

ExtendedKalmanFilter(dynamics, measurement, R1, R2, d0=MvNormal(R1); nu::Int, p = LowLevelParticleFilters.NullParameters(), α = 1.0, check = true, Ajac = nothing, Cjac = nothing)

ExtendedKalmanFilter(kf, dynamics, measurement; Ajac = nothing, Cjac = nothing)

The first constructor takes all the arguments required to initialize the extended Kalman filter, while the second one takes an already defined standard KalmanFilter kf, from which the covariance properties are taken. When using the first constructor, the user must provide the number of inputs to the system, nu.

If your function dynamics describes a continuous-time ODE, do not forget to discretize it before passing it to the EKF. See Discretization for more information.

AdvancedParticleFilter

The AdvancedParticleFilter works very much like the ParticleFilter, but admits more flexibility in its noise models.

The AdvancedParticleFilter type requires you to implement the same functions as the regular ParticleFilter, but in this case you also need to handle sampling from the noise distributions yourself. The function dynamics must have a method signature like below. It must provide one method that accepts state vector, control vector, parameter, time and noise::Bool that indicates whether or not to add noise to the state. If noise should be added, this should be done inside dynamics. An example is given below.

using Random

const rng = Random.Xoshiro()

function dynamics(x, u, p, t, noise=false) # It's important that `noise` defaults to false

x = A*x .+ B*u # A simple linear dynamics model in discrete time

if noise

x += rand(rng, df) # it's faster to supply your own rng

end

x

end

The measurement_likelihood function must have a method accepting state, input, measurement, parameter and time, and returning the log-likelihood of the measurement given the state. A simple example is given below:

function measurement_likelihood(x, u, y, p, t)

logpdf(dg, C*x-y) # An example of a simple linear measurement model with normal additive noise

end

This gives you very high flexibility. The noise model in either function can, for instance, be a function of the state, something that is not possible for the simple ParticleFilter. To be able to simulate the AdvancedParticleFilter like we did with the simple filter above, the measurement method with the signature measurement(x,u,p,t,noise=false) must be available and return a sample measurement given state (and possibly time). For our example measurement model above, this would look like this

# This function is only required for simulation

measurement(x, u, p, t, noise=false) = C*x + noise*rand(rng, dg)

We now create the AdvancedParticleFilter and use it in the same way as the other filters:

apf = AdvancedParticleFilter(N, dynamics, measurement, measurement_likelihood, df, d0)

sol = forward_trajectory(apf, u, y, ny) # Perform batch filtering

ParticleFilteringSolution{AdvancedParticleFilter{PFstate{StaticArraysCore.SVector{2, Float64}, Float64}, typeof(Main.dynamics), typeof(Main.measurement), typeof(Main.measurement_likelihood), Distributions.ZeroMeanIsoNormal{Tuple{Base.OneTo{Int64}}}, Distributions.IsoNormal, DataType, Random.Xoshiro, LowLevelParticleFilters.NullParameters}, Vector{StaticArraysCore.SVector{1, Float64}}, Vector{StaticArraysCore.SVector{1, Float64}}, Matrix{StaticArraysCore.SVector{2, Float64}}, Matrix{Float64}, Matrix{Float64}, Float64}(AdvancedParticleFilter{PFstate{StaticArraysCore.SVector{2, Float64}, Float64}, typeof(Main.dynamics), typeof(Main.measurement), typeof(Main.measurement_likelihood), Distributions.ZeroMeanIsoNormal{Tuple{Base.OneTo{Int64}}}, Distributions.IsoNormal, DataType, Random.Xoshiro, LowLevelParticleFilters.NullParameters}

state: PFstate{StaticArraysCore.SVector{2, Float64}, Float64}

dynamics: dynamics (function of type typeof(Main.dynamics))

measurement: measurement (function of type typeof(Main.measurement))

measurement_likelihood: measurement_likelihood (function of type typeof(Main.measurement_likelihood))

dynamics_density: Distributions.ZeroMeanIsoNormal{Tuple{Base.OneTo{Int64}}}

initial_density: Distributions.IsoNormal

resample_threshold: Float64 0.5

resampling_strategy: LowLevelParticleFilters.ResampleSystematic <: LowLevelParticleFilters.ResamplingStrategy

rng: Random.Xoshiro

p: LowLevelParticleFilters.NullParameters LowLevelParticleFilters.NullParameters()

threads: Bool false

Ts: Float64 1.0

nu: Int64 -1

ny: Int64 -1

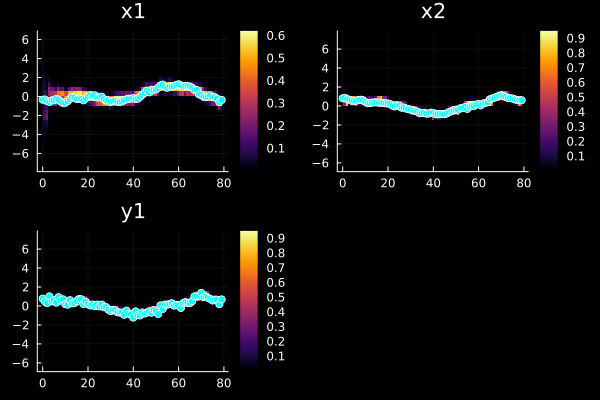

, StaticArraysCore.SVector{1, Float64}[[0.6537567983138092], [0.1461192700147572], [0.03698375156560759], [0.5602292719104064], [0.002711385683172688], [1.216368988016474], [0.9148009615031674], [-0.3524653231473113], [0.8223664116274871], [0.04215403951163809] … [-1.0337887519686355], [-0.33706629320751846], [-0.24191707955976194], [0.5865304145450738], [-0.22781307799099626], [0.5525384739112486], [-1.3079141513563888], [-2.151192338548518], [1.0228100007312686], [0.014585535599645521]], StaticArraysCore.SVector{1, Float64}[[0.7767556641521597], [0.4734320202298869], [0.3047260433147342], [1.0161224847386676], [0.49643174646668764], [0.5947618048493193], [0.3559927762544035], [0.9272352356868394], [0.5756490298157972], [0.7006036187518988] … [1.3748947641819471], [0.9213215943732835], [1.003610964645038], [0.8471612004456421], [0.7415462382321956], [0.580218811737972], [0.6747268036979963], [0.6616471028556352], [0.1929694248892282], [0.6924582825132476]], StaticArraysCore.SVector{2, Float64}[[-1.6188001359930264, -0.17065631039577234] [-2.9270820686102264, 0.3124152069267266] … [-0.7261991020343023, 1.0321674582230809] [-0.43879348265027895, 0.4948472404372535]; [0.44859247311722755, 0.09404215050870057] [-3.010390023668136, 0.39768936449234304] … [-0.7017463590604556, 0.5127455988439429] [-0.4804364747070058, 0.3777794173526398]; … ; [-5.937560962597444, 1.4342374394599435] [-2.161289551091557, 0.33967292916115854] … [-0.4640915337591551, 0.6413691090570216] [-0.8838208941829011, 0.5964581072961189]; [-1.6936171458445115, 3.324782077357008] [-1.8814771031369846, 0.26362535450934554] … [-0.795497087414882, 0.6746185735372018] [-0.7116111115882349, 0.7063963235894426]], [-16.542340735037577 -7.135122034412535 … -15.875917935921928 -6.89156903863804; -11.1486943874699 -6.882753731060927 … -6.997660470039002 -7.641227341493135; … ; -10.72600117685087 -7.03468553787788 … -8.282056240259642 -6.518642909780029; -86.47795514594696 -7.361277319593363 … 
-9.281764423441519 -6.4058708516215574], [6.542635126870922e-8 0.0007966285608558123 … 1.2740205853136065e-7 0.0010163179471856544; 1.4394067902541862e-5 0.0010253167076326797 … 0.0009140178382286743 0.00048023867335206385; … ; 2.196629825082086e-5 0.0008807950980779561 … 0.0002530164022628746 0.0014756703619180224; 2.773966895098824e-38 0.0006353863495532838 … 9.310669880830664e-5 0.0016518310953716878], -3.9679444594168913)

plot(sol, xreal=x)

We can even use this type as an AuxiliaryParticleFilter

apfa = AuxiliaryParticleFilter(apf)

sol = forward_trajectory(apfa, u, y, ny)

plot(sol, dim=1, xreal=x) # Same as above, but only plots a single dimension

See the tutorials section for more advanced examples, including state estimation for DAE (Differential-Algebraic Equation) systems.

Troubleshooting and tuning

Particle filters

Tuning a particle filter can be quite the challenge. To assist with this, we provide some visualization tools

debugplot(pf,u[1:20],y[1:20], runall=true, xreal=x[1:20])

Time Surviving Effective nbr of particles

--------------------------------------------------------------

t: 1 1.000 2000.0

t: 2 1.000 297.5

t: 3 0.151 2000.0

t: 4 1.000 1343.4

t: 5 1.000 402.4

t: 6 1.000 365.1

t: 7 1.000 267.9

t: 8 1.000 201.4

t: 9 0.168 2000.0

t: 10 1.000 1721.5

t: 11 1.000 1431.1

t: 12 1.000 795.8

t: 13 1.000 397.7

t: 14 1.000 419.5

t: 15 1.000 325.2

t: 16 1.000 271.7

t: 17 1.000 226.2

t: 18 0.215 2000.0

t: 19 1.000 1682.9

t: 20 1.000 1080.8

The plot displays all state variables and all measurements. The heatmap in the background represents the weighted particle distributions per time step. For the measurement sequences, the heatmap represents the distributions of predicted measurements. The blue dots correspond to measured values. In this case, we simulated the data and had access to the state as well; if the true state is not available, just omit xreal. You can also manually step through the time series using

commandplot(pf,u,y; kwargs...)

For options to the debug plots, see ?pplot.

Troubleshooting Kalman filters

A commonly occurring error is "Cholesky factorization failed", which may occur for several different reasons

- The dynamics is diverging and the covariance matrices end up with NaNs or Infs. If this is the case, verify that the dynamics is correctly implemented and that the integration is sufficiently accurate, especially if using a fixed-step integrator like any of those from SeeToDee.jl.

- The covariance matrix is poorly conditioned and numerical issues cause it to lose positive definiteness. This issue is rare, but can be mitigated by using the SqKalmanFilter, rescaling the dynamics or by using a different cholesky factorization method (available in UKF only).

Tuning noise parameters through optimization

See examples in Parameter Estimation.

Tuning through simulation

It is possible to sample from the Bayesian model implied by a filter and its parameters by calling the function simulate. A simple tuning strategy is to adjust the noise parameters such that a simulation looks "similar" to the data, i.e., the data must not be too unlikely under the model.

Videos

Several video tutorials using this package are available in the playlists

Some examples featuring this package in particular are

Using an optimizer to optimize the likelihood of an UnscentedKalmanFilter:

Estimation of time-varying parameters:

Adaptive control by means of estimation of time-varying parameters: